Want to wade into the snowy surf of the abyss? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post-Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality-challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be).

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Credit and/or blame to David Gerard for starting this. This was a bit late - I was too busy goofing around on Discord)

Today in autosneering:

KEVIN: Well, I’m glad. We didn’t intend it to be an AI focused podcast. When we started it, we actually thought it was going to be a crypto related podcast and that’s why we picked the name, Hard Fork, which is sort of an obscure crypto programming term. But things change and all of a sudden we find ourselves in the ChatGPT world talking about AI every week.

https://bsky.app/profile/nathanielcgreen.bsky.social/post/3mahkarjj3s2o

Obscure crypto programming term. Sure

Follow the hype, Kevin, follow the hype.

I hate-listen to his podcast. There’s not a single week where he fails to give a thorough tongue-bath to some AI hypester. Just a few weeks ago when Google released Gemini 3, they had a special episode just to announce it. It was a de facto press release, put out by Kevin and Casey.

Sunday afternoon slack period entertainment: image generation prompt “engineers” getting all wound up about people stealing their prompts and styles and passing off hard work as their own. Who would do such a thing?

https://bsky.app/profile/arif.bsky.social/post/3mahhivnmnk23

@Artedeingenio

Never do this: Passing off someone else’s work as your own.

This Grok Imagine effect with the day-to-night transition was created by me — and I’m pretty sure that person knows it. To make things worse, their copy has more impressions than my original post.

Not cool 👎

Ahh, sweet schadenfreude.

I wonder if they’ve considered that it might actually be possible to get a reasonable imitation of their original prompt by using an llm to describe the generated image, and just tack on “more photorealistic, bigger boobies” to win at imagine generation.

Eliezer is mad that OpenPhil (EA organization, now called Coefficient Giving)… advocated for longer AI timelines? And apparently he thinks they were unfair to MIRI, or didn’t weight MIRI’s views highly enough? And did so for epistemically invalid reasons? IDK, this post is a bit more of a rant and less clear than classic sequence content (but is par for the course for the last 5 years of Eliezer’s content). For us sane people, AGI by 2050 is still a pretty radical timeline, it just disagrees with Eliezer’s belief in imminent doom. Also, it is notable Eliezer has actually avoided publicly committing to consistent timelines (he actually disagrees with efforts like AI2027) other than a vague certainty we are near doom.

Some choice comments

I recall being at a private talk hosted by ~2 people that OpenPhil worked closely with and/or thought of as senior advisors, on AI. It was a confidential event so I can’t say who or any specifics, but they were saying that they wanted to take seriously short AI timelines

Ah yes, they were totally secretly agreeing with your short timelines but couldn’t say so publicly.

Open Phil decisions were strongly affected by whether they were good according to worldviews where “utter AI ruin” is >10% or timelines are <30 years.

OpenPhil actually did have a belief in a pretty large possibility of near term AGI doom, it just wasn’t high enough or acted on strongly enough for Eliezer!

At a meta level, “publishing, in 2025, a public complaint about OpenPhil’s publicly promoted timelines and how those may have influenced their funding choices” does not seem like it serves any defensible goal.

Lol, someone noting Eliezer’s call out post isn’t actually doing anything useful towards Eliezer’s goals.

It’s not obvious to me that Ajeya’s timelines aged worse than Eliezer’s. In 2020, Ajeya’s median estimate for transformative AI was 2050. […] As far as I know, Eliezer never made official timeline predictions

Someone actually noting AGI hasn’t happened yet and so you can’t say a 2050 estimate is wrong! And they also correctly note that Eliezer has been vague on timelines (rationalists are theoretically supposed to be preregistering their predictions in formal statistical language so that they can get better at predicting and people can calculate their accuracy… but we’ve all seen how that went with AI 2027. My guess is that at least on a subconscious level Eliezer knows harder near term predictions would ruin the grift eventually.)

Yud:

I have already asked the shoggoths to search for me, and it would probably represent a duplication of effort on your part if you all went off and asked LLMs to search for you independently.

The locker beckons

I’m a nerd and even I want to shove this guy in a locker.

There is a Yud quote about closet goblins in More Everything Forever p. 143 where he thinks that the future-Singularity is an empirical fact that you can go and look for so it’s irrelevant to talk about the psychological needs it fills. Becker also points out that “how many people will there be in 2100?” is not the same sort of question as “how many people are registered residents of Kyoto?” because you can’t observe the future.

Popular RPG Expedition 33 got disqualified from the Indie Game Awards due to using Generative AI in development.

Statement on the second tab here: https://www.indiegameawards.gg/faq

When it was submitted for consideration, representatives of Sandfall Interactive agreed that no gen AI was used in the development of Clair Obscur: Expedition 33. In light of Sandfall Interactive confirming the use of gen AI art in production on the day of the Indie Game Awards 2025 premiere, this does disqualify Clair Obscur: Expedition 33 from its nomination.

The latest poster who is pretty sure that Hacker News posts critical of YCombinator and their friends get muted like on big corporate sites (HN is open that they do a lot of moderation, but some is more public than others; this guy is not a fan of Omarchy Linux) https://マリウス.com/the-mysterious-forces-steering-views-on-hacker-news/

@CinnasVerses it’s almost as if the people running ycombinator had some sort of vested interest in a particular framing of tech stories

People on Hacker News have been posting for years about how positive stories about YC companies will have (YC xxxx) next to their name, but never the negative ones

Maciej Ceglowski said that one reason he gave up on organizing SoCal tech workers was that they kept scheduling events in a Google meeting room using their Google calendar with “Re: Union organizing?” as the subject of the meeting.

It’s a power play. Engineers know that they’re valuable enough that they can organize openly; also, as in the case of Alphabet Workers Union, engineers can act in solidarity with contractors, temps, and interns. I’ve personally done things like directly emailing CEOs with reply-all, interrupting all-hands to correct upper management on the law, and other fun stuff. One does have to be sufficiently skilled and competent to invoke the Steve Martin principle: “be so good that they can’t ignore you.”

I wonder what would have happened if Ceglowski had kept focused on talks and on working with the few Bay Area tech workers who were serious about unionizing, regulation, and anti-capitalism. It seemed like after the response to his union drive was smaller and less enthusiastic than he had hoped, he pivoted to cybersecurity education and campaign fundraising.

One of his warnings was that the megacorps are building systems so a few opinionated tech workers can’t block things. Assuming that a few big names will always be able to hold back a multibillion-dollar company through individual action so they don’t need all that frustrating organizing seems unwise (as we are seeing in the state of the market for computer touchers in the USA).

maciej cegłowski is also a self-serving arse, so i’d take anything he says with a large grain of salt.

His talks are great, but his time as a union organizer and campaign fundraiser left him so disillusioned that he headed in a reactionary direction (and neglected the business that lets him throw himself at random projects). He is a case study why getting on twitter is a very bad idea.

he’s also a self-important arse, which is kinda problematic when one tries to do organising. (one of the very important parts is that doing the union work is not a social club, and you may need to work with and accommodate people whom you personally very much dislike.)

I have never met Ceglowski or talked to anyone involved in his movements. These days I am doing some local things rather than join in the endless smartphone arguments about “everyone should be an activist and organizer!” vs. “I tried that and the things that make me good at writing long essays about politics / viral social media posts make me bad at organizing to elect a city councilor.”

again, my point here is that cegłowski is an unreliable narrator; you should not build an opinion based on his anecdotes (or his transphobia).

@CinnasVerses the valley is rife with these “wisdom is your dump stat” folks. can invert a binary tree on a whiteboard but might accidentally drown themselves in a rain puddle

(Detaches whiteboard from wall, turns whiteboard upside-down)

Inverted, motherfuckers

famous last words, “we are currently clean on opsec”

@CinnasVerses @cap_ybarra 10x developers, ladies, gentlemen, and enbies. The best and brightest.

10x developers, 0.1x proletariat.

The stakhanov we have at home

This had slipped under the radar for me

https://www.reddit.com/r/backgammon/comments/1k8nlay/new_chapter_for_extreme_gammon/

After 25 years, it is time for us to pass the torch to someone else. Travis Kalanick, yes the Uber founder, has purchased Gammonsite and eXtreme Gammon and will take over our backgammon products (he has a message to the community below)

:(

Took me a second to realize you were actually talking about backgammon, and not using gammon (as in the british angry ham) as a word replacement.

This makes me wonder, how hard is backgammon? As in computability wise, on the level of chess? Go? Or somewhere else?

Backgammon is “easier” than chess or go, but it has dice, so it has not (yet) been completely solved like checkers. I think only the endgame (“bearing off”) has been solved. The SOTA backgammon AI using NNs is better than expert humans but you can still beat it if you get lucky. XG is notable because if you ever watch high stakes backgammon on youtube, they will run XG side by side to show when human players make blunders. That’s how I learned about it anyway.

Thanks! Had not really thought about how dice would mess with the complexity of things tbh.
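For anyone curious how the dice change the shape of the problem, here is a rough toy sketch, not how XG or any real engine works. Two dice give 21 distinct rolls with unequal odds, so every lookahead step has to average over those rolls instead of following one forced line. The single-checker "race" below is a made-up simplification (the names `ROLLS`, `pips` and `expected_rolls` are mine, and summing the dice into one move ignores the real movement rules); it just computes the expected number of rolls to clear a given pip count.

```python
from fractions import Fraction
from functools import lru_cache
from itertools import combinations_with_replacement

# The 21 distinct rolls of two dice with their probabilities:
# doubles can come up one way out of 36, every other roll two ways.
ROLLS = [
    ((a, b), Fraction(1 if a == b else 2, 36))
    for a, b in combinations_with_replacement(range(1, 7), 2)
]


def pips(roll):
    """Pips moved by a roll; doubles are played four times."""
    a, b = roll
    return 4 * a if a == b else a + b


@lru_cache(maxsize=None)
def expected_rolls(remaining):
    """Expected rolls needed to clear `remaining` pips (toy model)."""
    if remaining <= 0:
        return Fraction(0)
    # Chance node: take this roll, then average over all 21 outcomes.
    return 1 + sum(p * expected_rolls(remaining - pips(r)) for r, p in ROLLS)


if __name__ == "__main__":
    print(len(ROLLS))          # 21
    print(expected_rolls(4))   # 19/18
```

Even this tiny race needs a probability-weighted sum at every step; a real position adds the player's move choices and the opponent's replies on top of each of those 21 branches.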

A story of no real substance. Pharmaicy, a Swedish company, has reportedly started a new grift where you can give your chatbot virtual, “code-based drugs”, ranging from 300,000 kr, for weed code, to 700,000 kr, cocaine.

editor’s note: 300000 swedish krona is approximately 328,335.60 norwegian krone. 700000 SEK is about 766116.40.

Thanks for the conversion. Real scanlation enjoyers will understand.

nor… norway!!!

To be more clear:

300000 swedish krona = ~672 690 czech koruna

700000 swedish krona = ~1 569 611 czech koruna

to be even clearer:

300k swedish krona = ~54k bulgarian lev = ~119k uae dirham

700k swedish krona = ~126k bulgarian lev = ~277k uae dirham

So, I’m taking this one with a pinch of salt, but it is entertaining: “We Let AI Run Our Office Vending Machine. It Lost Hundreds of Dollars.”

The whole exercise was clearly totally pointless and didn’t solve anything that needed solving (like every other “ai” project, i guess) but it does give a small but interesting window into the mindset of people who have only one shitty tool and are trying to make it do everything. Your chatbot is too easily led astray? Use another chatbot to keep it in line! Honestly, I thought they were already doing this… I guess it was just too expensive or something, but now the price/desperation curves have intersected.

Anthropic had already run into many of the same problems with Claudius internally so it created v2, powered by a better model, Sonnet 4.5. It also introduced a new AI boss: Seymour Cash, a separate CEO bot programmed to keep Claudius in line. So after a week, we were ready for the sequel.

Just one more chatbot, bro. Then prompt injection will become impossible. Just one more chatbot. I swear.

Anthropic and Andon said Claudius might have unraveled because its context window filled up. As more instructions, conversations and history piled in, the model had more to retain—making it easier to lose track of goals, priorities and guardrails. Graham also said the model used in the Claudius experiment has fewer guardrails than those deployed to Anthropic’s Claude users.

Sorry, I meant just one more guardrail. And another ten thousand tokens capacity in the context window. That’ll fix it forever.

Why is WSJ rehashing six month old whitepapers? Slow news week /s

Anything new vs the last time it popped up? https://www.anthropic.com/research/project-vend-1

EDIT:

In mid-November, I agreed to an experiment. Anthropic had tested a vending machine powered by its Claude AI model in its own offices and asked whether we’d like to be the first outsiders to try a newer, supposedly smarter version.

What’s that word for doing the same thing and expecting different results?

Hah! Well found. I do recall hearing about another simulated vendor experiment (that also failed) but not actual dog-fooding. Looks like the big upgrade the wsj reported on was the secondary “seymour cash” 🙄 chatbot bolted on the side… the main chatbot was still claude v3.7, but maybe they’d prompted it harder and called that an upgrade.

I wonder if anthropic trialled that in house, and none of them were smart enough to break it, and that’s what led to the external trial.

The monorail salespeople at Checkmarx have (allegedly) discovered a new exploit for code extruders.

The “attack”, titled “Lies in the Loop”, involves taking advantage of human-in-the-loop “”“safeguards”“” to create fake dialogue prompts, thus tricking vibe-coders into running malicious code.

It’s interesting to see how many ways they can find to try and brand “LLMs are fundamentally unreliable” as a security vulnerability. Like, they’re not entirely wrong, but it’s also not something that fits into the normal framework around software security. You almost need to treat the LLM as though it were an actual person not because it’s anywhere near capable of that but because the way it fits into the broader system is as close as IT has yet come to a direct in-place replacement for a human doing the task. Like, the fundamental “vulnerability” here is that everyone who designs and approves these implementations acts like LLMs are simultaneously as capable and independent as an actual person but also have the mechanical reliability and consistency of a normal computer program, when in practice they are neither of those things.

Does Checkmarx have any relation to infamous ring-destroying pro wrestler Cheex? https://prowrestling.fandom.com/wiki/Mike_Staples

If not, perhaps they should seek an endorsement deal!

That would be such a “we didn’t know the dotcom bubble was popping a month later” move.

Ben Williamson, editor of the journal Learning, Media and Technology:

Checking new manuscripts today I reviewed a paper attributing 2 papers to me I did not write. A daft thing for an author to do of course. But intrigued I web searched up one of the titles and that’s when it got real weird… So this was the non-existent paper I searched for:

Williamson, B. (2021). Education governance and datafication. European Educational Research Journal, 20(3), 279–296.

But the search result I got was a bit different…

Here’s the paper I found online:

Williamson, B. and Piattoeva, N. (2022) Education Governance and Datafication. Education and Information Technologies, 27, 3515-3531.

Same title but now with a coauthor and in a different journal! Nelli Piattoeva and I have written together before but not this…

And so checked out Google Scholar. Now on my profile it doesn’t appear, but somehow on Nelli’s it does and … and … omg, IT’S BEEN CITED 42 TIMES almost exclusively in papers about AI in education from this year alone…

Which makes it especially weird that in the paper I was reviewing today the precise same, totally blandified title is credited in a different journal and strips out the coauthor. Is a new fake reference being generated from the last?..

I know the proliferation of references to non-existent papers, powered by genAI, is getting less surprising and shocking but it doesn’t make it any less potentially corrosive to the scholarly knowledge environment.

Relatedly, AI is fucking up academic copy-editing.

One of the world’s largest academic publishers is selling a book on the ethics of artificial intelligence research that appears to be riddled with fake citations, including references to journals that do not exist.

Came across this gem, with the author concluding that a theoretical engineer can replace SaaS offerings at small businesses with some Claude. While there is an actual problem highlighted (SaaS offerings turning into a disjoint union of customer requirements that spiral in complexity, SaaS itself as a tool for value extraction), the conclusion is just so wrong-headed.

Today, in fascists not understanding art, a suckless fascist praised Mozilla’s 1998 branding:

This is real art; in stark contrast to the brutalist, generic mess that the Mozilla logo has become. Open source projects should be more daring with their visual communications.

Quoting from a 2016 explainer:

[T]he branding strategy I chose for our project was based on propaganda-themed art in a Constructivist / Futurist style highly reminiscent of Soviet propaganda posters. And then when people complained about that, I explained in detail that Futurism was a popular style of propaganda art on all sides of the early 20th century conflicts… Yes, I absolutely branded Mozilla.org that way for the subtext of “these free software people are all a bunch of commies.” I was trolling. I trolled them so hard.

The irony of a suckless developer complaining about brutalism is truly remarkable; these fuckwits don’t actually have a sense of art history, only what looks cool to them. Big lizard, hard-to-read font, edgy angular corners, and red-and-black palette are all cool symbols to the teenage boy’s mind, and the fascist never really grows out of that mindset.

It irks me to see people casually use the term “brutalist” when what they really mean is “modern architecture that I don’t like”. It really irks me to see people apply the term brutalist to something that has nothing to do with architecture! It’s a very specific term!

“Brutalist” is the only architectural style they ever learned about, because the name implies violence

ModRetro, retro gaming company infamous for being helmed by terrible person Palmer Luckey, has put out a version of their handheld made with “the same magnesium aluminum alloy as Anduril’s attack drones” (bluesky commentary, the linked news article is basically an ad and way too forgiving).

So uhh… they’re not beating those guilt by association accusations any time soon.

Time Extension’s decided to wash their hands of ModRetro after seeing the news. Good call on their part.

@BlueMonday1984 @sailor_sega_saturn the colors on that Warcrime Boy ™ kinda remind me of historic (wartime) flags of Germany 😳

a version of [the ModRetro Chromatic] made with “the same magnesium aluminum alloy as Anduril’s attack drones”

Obvious moral issues aside, is that even an effective marketing point? Linking yourself to Anduril, whose name is synonymous with war crimes and dead civs, seems like an easy way to drive away customers.

I’m holding out for the Lockheed-branded Atari Lynx clone that’s made from surplus R9X knife missile parts.

I have my eye on the McDonnell Douglas-branded Neo Geo, which will be a value-engineered trijet and a brick of explosive all while only running the version of worm that was on the nokia brick phone.

Be careful my pal says the engines tend to fall off!

yeah, I dunno how large the union of retro game handheld enthusiasts and techfash lickspittles is.

The before-games-went-woke sector was large enough to bring us gamergate so there’s definitely a sizable available crossover.

This doesn’t really feel performative enough for that crowd, though. Like, if it included some kind of horribly racist engraving or even just a company logo then maybe, but I don’t think anyone’s gonna trigger the libs by just playing their metal not-gameboy.

Obviously, if they’ve got magnesium alloy to divert into Game Boy ripoffs, the attack drone contract must not be going particularly well

i think that some of these are meant to be disposable

In the case of loitering munitions (also known as kamikaze drones, or suicide drones), you’d be correct - by design, they’re intended to crash into their target before blowing them up. Some reportedly do have recovery options built-in, but that’s only to avoid wasting them if they go unused.

even in cases where it isn’t that, it has no pilot, so at minimum even a highly capable drone is more disposable than a plane, which is like the entire point

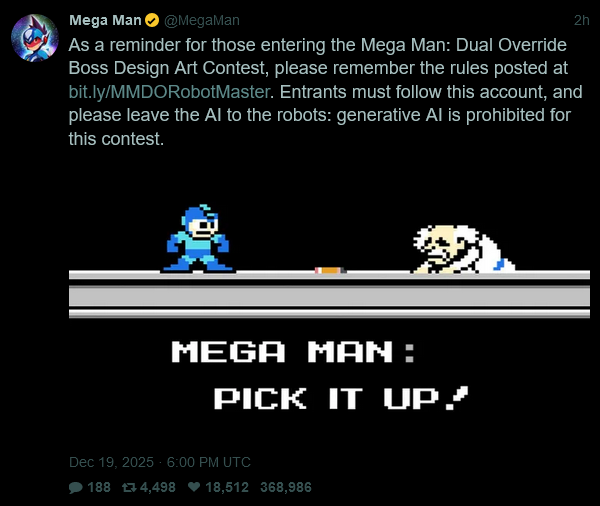

For more lighthearted gaming-related news, Capcom fired off a quick sneer, whilst (indirectly) promoting their latest Mega Man game:

(alt text: “As a reminder for those entering the Mega Man: Dual Override Boss Design Art Contest, please remember the rules posted at bit.ly/MMDORobotMaster. Entrants must follow this account, and please leave the AI to the robots: generative AI is prohibited for this contest.”)

This was a prime opportunity to trot out bad box art megaman and they didn’t take it

OT: Lurasidone is neat stuff.

Never tried it. Do you have bipolar? I do. My psych is reluctant to put me on an antipsychotic again because Abilify made me gain 30 pounds and become prediabetic.

Yeah, BP2. Replacing risperidone. Metformin can help with antipsych weight gain fwiw, some really fascinating studies out there.

Yea I’m BP1. I switched to lamotrigine and lost all the weight and my fasting glucose went from 113 down to 85