BlueMonday1984

he/they

- 19 Posts

- 118 Comments

11·17 hours ago

You must sign in to view this post.

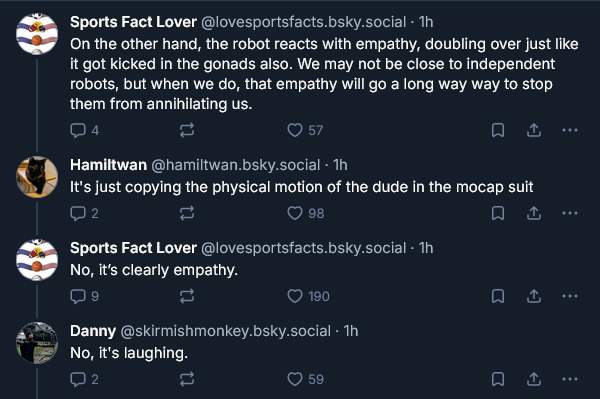

My lack of a Bluesky account spares me again. Thankfully, I have Skyview.social to ensure my eyes are scarred anyway (seriously, do we have to fight another world war over whether Nazis are bad or not? It shouldn’t take the blood of tens of millions to make that clear).

Also found a nice summary of our current moment in the replies:

(alt: “I am old enough to remember when we all agreed Nazis were bad”)

7·22 hours ago

Another ChatGPT fatality’s just hit the news - California teen Sam Nelson has died of a drug overdose, after two years of trusting the chatbot for drug advice.

12·3 days ago

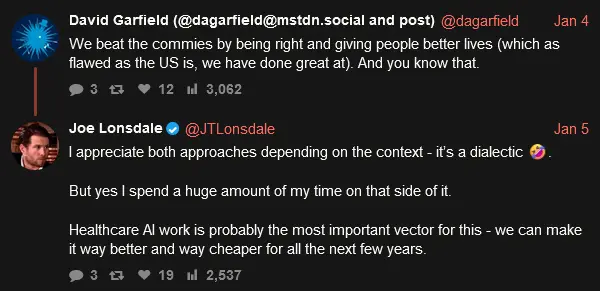

Found something rare today: an actual sneer from Mike Masnick, made in response to Reuters confusing lying machines with human beings:

2·3 days ago

Big brain: this ai stuff isn’t going away, maybe I should invest in defense contractors that’ll outfit the US’s invasion of Taiwan…

Considering Recent Events™, anyone outfitting America’s gonna be making plenty off a war in Venezuela before the year ends.

13·4 days ago

A journalist attempts to ask the question “Why Do Americans Hate A.I.?”, and shows their inability to tell actually useful tech from lying machines:

Bonus points for gaslighting the public on billionaires’ behalf:

8·4 days ago

Got a sneer which caught my attention: Guest Gist: 2026, Our Already Rotting Future

4·5 days ago

There’s also skyview.social, which I personally use since I am not interested in signing up.

11·6 days ago

Good for them. Not quite abandoning the project and deleting it, but it’s a good move from them nonetheless.

8·9 days ago

Foz Meadows brings a lengthy and merciless sneer straight from the heart, aptly titled “Against AI”.

1·9 days ago

Alternate (likely harebrained) idea: sell the fediverse as a national security thing, and try to get a defence contract out of it

Reducing government/military reliance on tech corps would reduce the leverage they (and their CEOs) have over them, reducing the risk of, say, a repeat of that time Elon Musk fucked over Ukraine.

6·12 days ago

Pike’s blow-up has made it to the red site, and it’s brought promptfondlers out of the woodwork.

(Skyview proxy, for anyone without a Bluesky account)

51·14 days ago

Defaults are pretty good - didn’t need to do much to feel comfortable.

601·14 days ago

you_were_the_chosen_one.gif

I jumped ship to Librewolf a year and a half ago (after seeing Mozilla steal people’s data and sell it to advertisers), and I’m pretty fucking thankful for that. For anyone looking to leave Mozilla to rot, I highly recommend it.

6·14 days ago

Google’s lying machine lies about fiddler Ashley MacIsaac, leading to his concert being cancelled.

13·15 days ago

Main thing I knew Karl for is losing a defamation lawsuit to vexatious litigant par excellence Billy Mitchell, after he falsely blamed Billy for driving speedrun YouTuber Apollo Legend to kill himself, refused to retract his claims despite a complete lack of evidence, then misrepresented the lawsuit as being over Billy’s well-substantiated cheating allegations whilst raising money on GoFundMe.

Jobst’s past as a pick-up artist (plus some racist Discord posts from him) would resurface in the wake of his loss, which goes some way to explain why the anti-woke shit went “unnoticed”.

11·16 days ago

Starting this Stubsack off, here’s Baldur Bjarnason lamenting how tech as a community has gone down the shitter.

SFGATE columnist Drew Magary has decreed “The time has come to declare war on AI”, and put out a pretty solid sneer in the process. Bonus points for openly sneering at billionaire propaganda service Ground News, too.